ERNEST // An Emotionally Aware AI

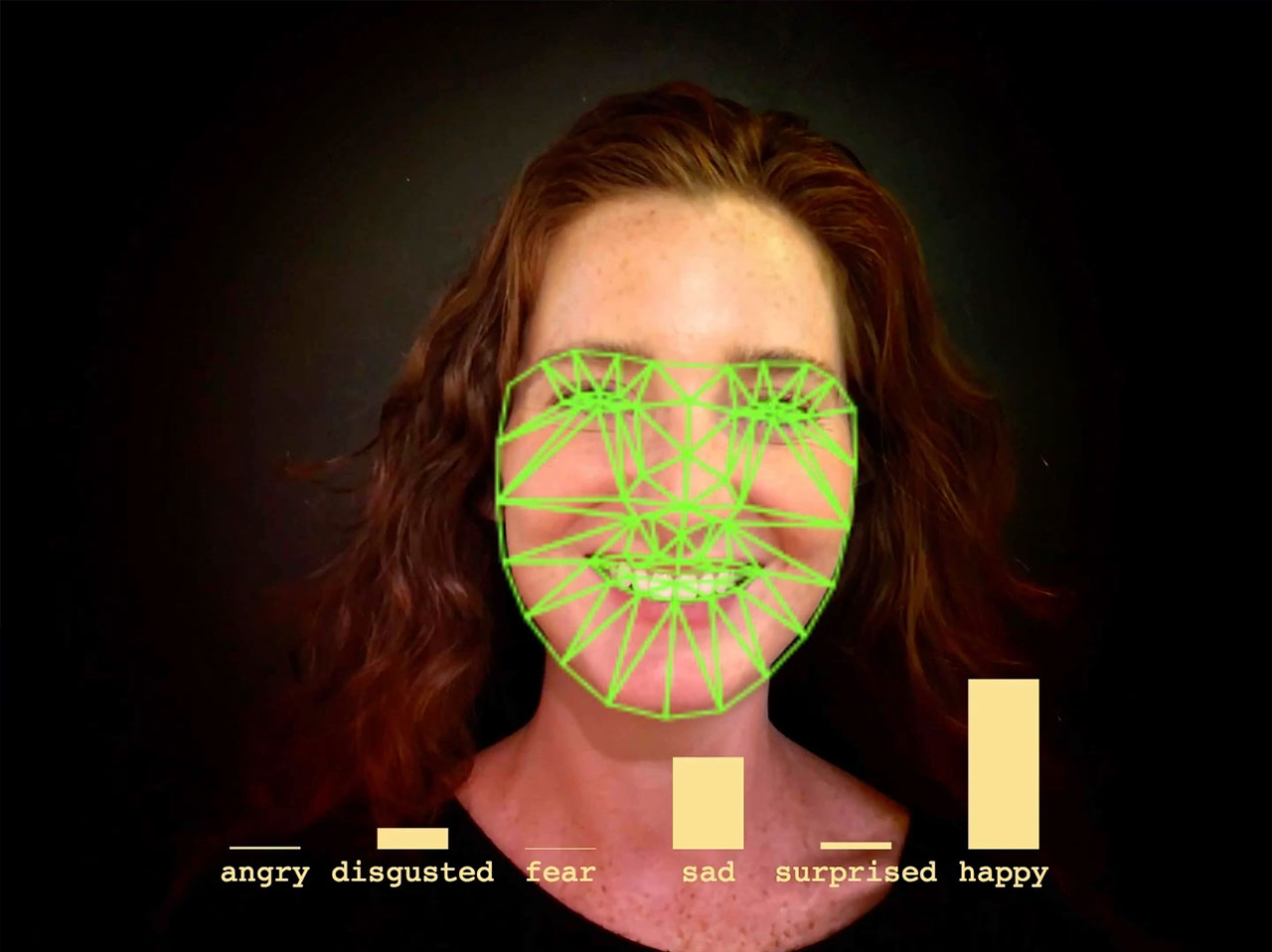

ERNEST is an interactive exploration of machine learning that puts the viewer in the role of training an emotionally aware artificial intelligence designed to make people happy.

ERNEST is from an era prior to ubiquitous data collection, so it needs you to help train the Happiness Model™ so it can learn what content makes people happiest.

ERNEST uses computer vision to detect your face, and then reacts to you based on your facial expressions. He’s learning to understand emotions and how various pieces of content can affect your emotional expressions. But while we’re training a Happiness Model™ with ERNEST, how else might this technology be applied despite the good intentions of its creators?

Through ERNEST we hope to open a dialogue around the ethics of gathering the data used to train and validate models, the places bias can creep into these models, the gap between intent and real-world applications of these models, and the tensions around augmenting or automating decision making using imperfect models.

ERNEST served as the lobby installation at IDEO San Francisco during October and November 2018, and has been a semi-permanent part of our office since July 2019.

ERNEST was exhibited at the San Francisco Art Institute Fort Mason Gallery for the CODAME Intersections event in November 2018, as part of the 50th anniversary convening of Leonardo / The International Society of Arts, Sciences and Technology.

On August 22, 2019, ERNEST was exhibited at The Exploratorium After Dark: Artificially Intelligent in San Francisco, CA.

In November and December 2019, ERNEST was shown at the Tabačka Kulturfabrik + Kasárne Kulturpark in Košice, Slovakia, as part of the Art+Tech Days festival.

This project was developed primarily by Matt Visco and me, with a bunch of support from IDEO.

Read more about ERNEST on the IDEO Design Blog:

- No Beards Allowed: Exploring Bias in Facial Recognition AI

- 7 Experiments That Push the Edges of AI and Design

The ERNEST UI was inspired by ELIZA, an early terminal-based AI / chatbot.